Opportunistic Controls


Columbia University


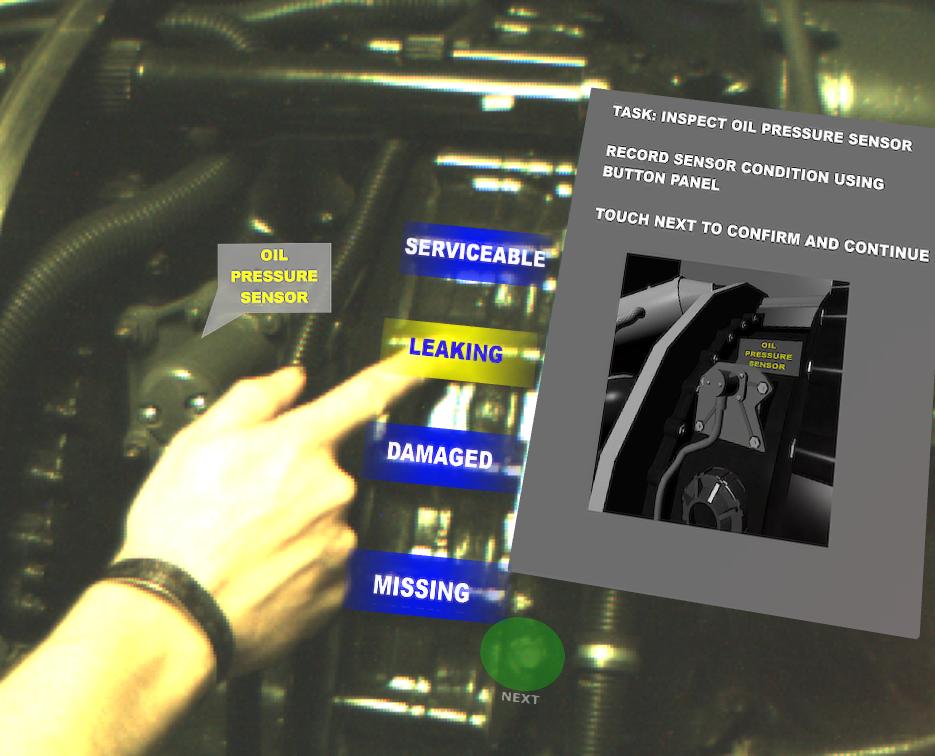

Opportunistic Controls are a class of user interaction techniques we have developed for augmented reality (AR) applications. They support gesturing on, and receiving feedback from, otherwise unused affordances already present in the domain environment. By leveraging characteristics of these affordances to provide passive haptics that ease gesture input, Opportunistic Controls simplify gesture recognition and provide tangible feedback to the user. In this approach, 3D widgets are tightly coupled with affordances to provide visual feedback and hints about the functionality of the control. For example, a set of buttons can be mapped to existing tactile features on domain objects. We have created examples of Opportunistic Controls that use optical marker tracking combined with appearance-based gesture recognition.

We have explored Opportunistic Controls in two user studies. In the first, participants performed a simulated maintenance inspection of an aircraft engine using a set of virtual buttons implemented both as Opportunistic Controls and using simpler passive haptics. Opportunistic Controls allowed participants to complete their tasks significantly faster and were preferred over the baseline technique. In the second, participants proposed and demonstrated user interfaces incorporating Opportunistic Controls for two domains, giving us additional insight into how user interfaces featuring Opportunistic Controls might be designed.


A user manipulates a virtual button while receiving haptic feedback from the raised geometry of the underlying engine housing.


Steve Henderson, Steven Feiner, "Opportunistic Tangible User Interfaces for Augmented Reality", IEEE Transactions on Visualization and Computer Graphics (accepted).

Steve Henderson, Steven Feiner, "Opportunistic Controls: Leveraging Natural Affordances as Tangible User Interfaces for Augmented Reality", ACM Virtual Reality Software and Technology (VRST) 2008, pp. 211-218, Oct 2008. (pdf)


This research was funded in part by DAFAFRL Grant FA8650-05-2-6647, ONR Grant N00014-04-1-0005, and a generous gift from NVIDIA. We also thank Bengt-Olaf Schneider of NVIDIA for providing the StereoBLT SDK used to support the display of stereo camera imagery.


Please send comments to Steve Henderson